Throwing a die for a cookie; Acceptance-rejection policies

Table of Contents

1. Introduction

We have a class with \(N=19\) children and one biscuit. We would like to select one child to give the biscuit, but fate should decide which child. Can we use a fair six-sided die to select a winner, with multiple throws, such that all children have an equal probability of winning? And if so, what strategy would be best, in the sense that it minimizes the expected number of throws?

To answer these questions, we start with the analysis of simple cases to learn, hopefully, how to handle the case with \(19\) children. In this process we design a simple and intuitive strategy. As this policy is somehwat hard to analyze mathematically, we'll use simulation to assess its quality. It turns out that this policy is unfair: some children will have a larger probability of winning than others. Then we retract a bit and provide a better algorithm, which is fair and optimal in the sense that is requires the least number of throws on average to select the winner. {{{sidenote{(I discussed this problem with Henk Tijms and he pointed out an answer on Quora on how to handle a class with 20 pupils. Bases on this, I generalized this answer to an algorithm that can deal with an arbitrary number of children.)}}}

In this section we introduce some general concepts that are generally useful. The first is recursion, the second is to use acceptance-rejection policies to decide whether a game should continue or stop.

2. A general approach

The first aim is to find a policy that selects in a fair way a winner. But before embarking on how to do this for general classes, we start with considering some simple problems. Perhaps we spot a pattern!

Obviously, when there is \(N=1\) child, there is no need to choose. The next simplest case is when we have \(N=6\) children: number the children from 1 to 6, and throw the die.

What strategy would you use for \(N=2\) children?

Solution

Throw the die. If the outcome is in \(\{1, 2, 3\}\) give the biscuit to the first child, otherwise to the second.

What strategy would you use for \(N=3\)?

Solution

If the outcome is in \(\{1, 2\}\), give the biscuit to the first child; if in \(\{3, 4\}\) to the second; otherwise to the third.

When \(N=4\), we can use the following policy. Throw the die for each child. Each child whose outcome is below \(5\) `survives' to the next round. When there are no survivors after the first round (all threw \(5\) or \(6\) ) let them all throw once again. When there are \(1\), \(2\), or \(3\) survivors, use the suitable strategy we developed above. Thus, the idea of this algorithm is like this: use the die to reduce the group such that a set of children remains for which we already have a policy. However, while this is a fool-proof strategy, it requires at least \(4\) throws. We'd better search for more efficient policies.

For \(N=4\), find a policy that uses precisely two throws of the die to select a child.

Solution

Throw once. If the outcome is in \(\{1,2,3\}\), then we will use the second throw to give the cookie to either child \(1\) or \(2\). Instead, if the outcome is in \(\{4,5,6\}\), then we will use the second throw to give the cookie to either child \(3\) or \(4\).

Note that the first throw splits the group into two subgroups \(\{1,2\}\) and \(\{3, 4\}\) of the same size. Then we have two smaller groups, and for these smaller group we already know how to find a fair solution. Clearly, the pattern of recursion is to try to express the solution in terms of solutions of problems we solved earlier.

For \(N=4\) we can also keep on throwing until a child is selected. How would such a strategy work?

Solution

Throw the die once. If the outcome is in \(\{1, 2, 3, 4\}\), there is a winner. Otherwise, when the outcome is \(5\) or \(6\), throw again, and continue throwing until the outcome \(X\in \{1, 2, 3, 4\}\).

This second strategy is known as an acceptance-rejection policy: accept an outcome of an experiment when the outcome lies in a certain subset of the state space, otherwise reject the outcome, and sample again.1

We can compute the expected number of throws for the second policy with, so-called, first-step analysis. Write \(Y\) for the number of throws we need for the die to hit the set \(\{1,2,3,4\}\). The probability to hit this set is \(p=2/3\), while the probability to hit \(\{5,6\}\) is \(q=1-p\).

If \(\E Y\) is the expected number of throws until we are successful, explain that it must satisfy the relation \(\E Y = 1 + q \E Y\).

Solution

We need at least one throw. In case of a success, we can stop. In case of a failure, which happens with probability \(q\), we need another throw.

In probability terms, we say that \(Y\) has the first success distribution with failure probablity \(q\). This is rv is similar to a geometric rv, but slightly different. The first success rv counts the number of throws up and including the number of throws until a success; the geometric rv counts the number of failures until the success, so one less.2 From this,3

\begin{equation*} \E Y = 1 + q \E Y \implies (1-q) \E Y = 1 \implies \E Y = \frac{1}{1-q} = \frac{1}{p}. \end{equation*}So, for \(N=4\), \(\E Y = 3/2\).

Interestingly, the first strategy, based on recursion, always needs exactly \(2\) throws. Instead, the acceptance-rejection method requires less throws on average, but there is no formal bound on \(Y\). We remark in passing an important take away in the design of random algorithms. Sometimes it is required that an algorithm finishes in a guaranteed fixed amount time, rather than that it finishes fast. When time constraints are relevant, it's good to have a deterministic algorithm, even though it can be slower on average.

What is the performance of the acceptance-rejection strategy for \(N=5\)?

Solution

The success probability \(p=5/6\), hence \(\E Y = 6/5\).

For \(N=7\), design an acceptance-rejection algorithm that uses rounds, each consisting of two throws.

Solution

We need at least one round of two throws. Let's make the acceptance region as large as possible so that the probability of rejection set is as small as possible. As \(7\times 5 = 35\), we should chop up the sample space in \(7\) non-overlapping sets of equal size. Let \(X_1\) and \(X_2\) be the outcomes of the first and second throw, respectively. When \(X_1=1, X_2\leq 5\), that is, when the outcome of both throws lies in \(\{(1,1), (1,2), (1,3), (1,4), (1,5)\}\), child 1 wins. When \(X_1=2, X_2 \leq 5\), child 2 wins, and so on until child 6. For child \(7\), take the associated set such that \(X_1 \leq 5, X_2 = 6\). Observe that these events don't overlap. Finally, if the outcome is \((6,6)\), rethrow the die.

For \(N=7\), what is the expected number of throws for this acceptance-rejection policy?

Solution

With success probability \(p=35/36\), one of the children is selected. If the outcome is \((6,6)\) we need another round of two throws. The expected number of rounds is \(1/p=36/35\). Since each round contains two throws, the number of throws is \(\E Y = 2/p = 72/35\), which is nearly \(2\).

For \(N=19\), what is the expected number of throws under an acceptance-rejection policy similar to the one of 1?

Solution

Throw twice. Assign student one to \((1,1)\), student two to \((1,2)\), \ldots, student \(19\) to \((4,1)\). Either a student is selected by the pair of throws, with success probability \(p=19/36\), or none is selected and we throw twice again. Then \(\E Y = 2/p = 72/19 \approx 4\).

Why is this form of acceptance-rejection much more efficient for classes of size \(N=7\) than when \(N=19\)?

Solution

For \(N=7\) we can cover the sample space nearly, the rejection set contains just one element. However, for \(N=19\), the cover of the state space with 36 outcomes is maximally inefficient.

Use the idea of the previous exercise to design a policy that needs slightly over 3 throws on average to select a winner for \(N=19\).

Solution

Take rounds, each with three throws, to get a sample space of size \(6^{3} = 216\) states. Split this into 19 subsets of equal size to guarantee that all children have the same probability to win, but such that the rejection is minimal. Since \(19\times 11 = 209\) each subset should contain 11 samples. For instance, for child 1 we can take \((1,1,1), (1,1,2), \ldots, (1, 2, 5)\).

The rejection set, i.e., the number of states not assigned to any child, is \(216-209=7\). Thus, the probability to need another rounds is \(7/209\), and the expected number of rounds is \(216/209\). Hence, the expected number of throws \(\E Y = 3 \times 216/209 \approx 3\).

Observe that with the strategy discussed in 1 for \(N=19\), we always have to throw at least three times. However, the strategy of 1 sometimes completes in just two throws. Might there be a optimal policy that needs somewhere between \(2\) and \(3\) throws on average?

3. An appealing, but wrong, strategy

One promising idea to improve on the strategy of using complete rounds of two throws for \(N=19\) is as follows. If there is no winner after the first and second throw, just throw the die a third time. Now check whether the result of the second and third throw is in the list of winning outcomes. To illustrate, if \((X_1, X_2) = (4, 3)\), then, by 1, the outcome \((4,3)\) is not a winning sequence, so that we have to continue with a third throw. After seeing \(X_3=4\), there is winning sequence, since \((X_2, X_3) = (3,4)\), which corresponds to child \((3-1)\cdot 6 + 4 = 16\). If, however, \((X_1, X_2, X_3) = (4, 5, 1)\) there is not yet a winner, because neither \((4,5)\) nor \((5,1)\) correspond to a child. Supposing that \(X_{4}=3\), the result of the last two throws is \((X_3, X_4) = (1, 3)\) so child \(3\) wins.

Notwithstanding that this is an appealing strategy, I was not convinced that this strategy will result in all children having an equal probability to win. The reason is that it reminded me of the, so-called, `monkey-typing problem'. In a later chapter we will explore this problem, but since its analysis requires some new mathematical ideas, we will use here simulation to see how our this new `monkey' policy performs when \(N=19\).

Before we discuss the code below, you should know that in Python (like in C and many other programming languages) arrays start at index 0. This will turn out very practical later in this chapter when we use modulo computions. Hence, in all maths and the code below, children start couting at \(0\), and if we have, for example \(4\) children, the associated set will be \(\{0, 1, 2, 3\}\), and not \(\{1, 2, 3, 4\}\).

The main parts of the next python code work as follows.

The tuple last_two corresponds to the outcomes of the last two throws.

The rejection set reject contains the tuples that do not correspond to a winner, hence require at least one further throw; hence, reject contains the outcome pairs \((3,1), \ldots, (5,5)\).

If last_two is an element of reject, we throw the die again and update last_two to include the last throw.

We continue with throwing until last_two is accepted.

Once we have a winner, we add one win for this child.

Finally, the count object counts how often each outcome occurs.4

import collections

import itertools

import numpy as np

rng = np.random.default_rng(1)

reject = set().union((3, i) for i in range(1, 6))

for i, j in itertools.product([4, 5], range(6)):

reject.add((i, j))

count = collections.Counter()

num_runs = 100_000

for _ in range(num_runs):

last_two = (rng.integers(6), rng.integers(6))

while last_two in reject:

last_two = (last_two[1], rng.integers(6))

count[last_two] += 1

most = count.most_common()[0][1]

least = count.most_common()[-1][1]

mean = num_runs // 19

print(f"{len(reject)=}, {len(count)=}")

print(f"{least=}, {mean=}, {most=}, {count.total()=}")

len(reject)=17, len(count)=19 least=4464, mean=5263, most=5677, count.total()=100000

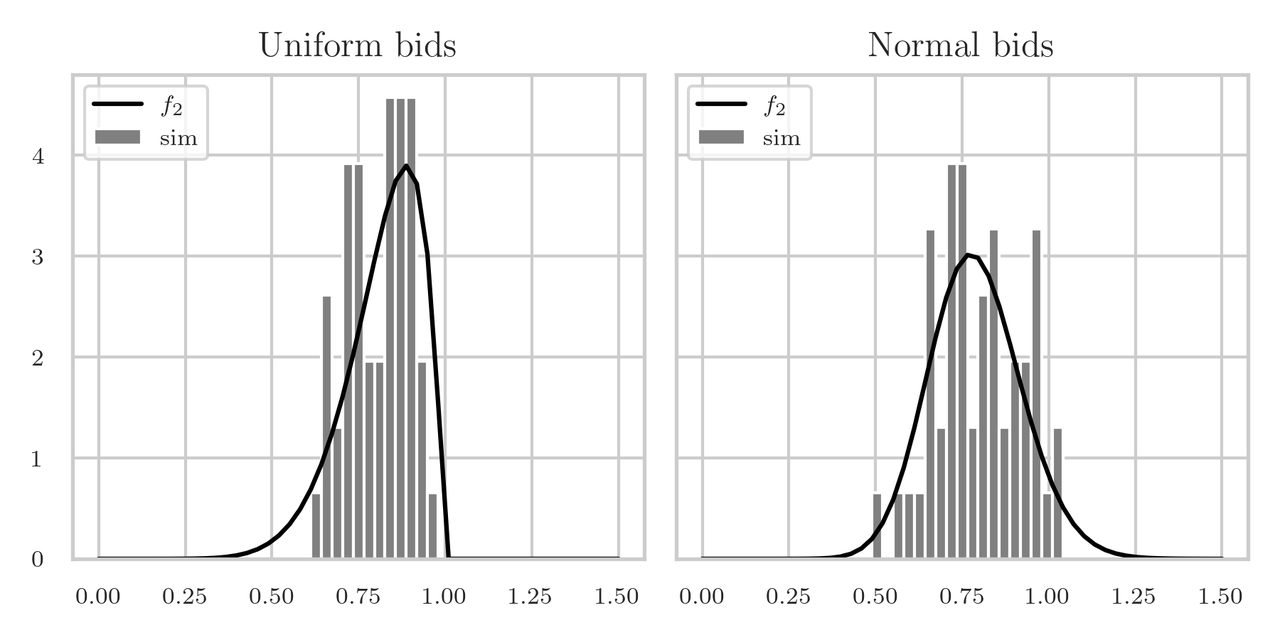

We print three checks: the size of the rejection set is \(17\), as it should, the counter counted \(19 = 35 - 17\) different outcomes, and we see \(10^5\) samples in total. The difference between the children that win the most and the least is about 900, i.e., about \(1\%\) for a sample size of \(10^{5}\) throws. Is this large? To provide some context, we can compare this with a simulation of uniform numbers on \(\{0, 1, \ldots, 18\}\).

import numpy as np

rng = np.random.default_rng(1)

N = 100_000

X = rng.integers(19, size=N)

bins = np.bincount(X)

print(f"{min(bins)=}, {max(bins)=}")

min(bins)=5144, max(bins)=5363

This variation between the numbers that occur the most and least often is quite a bit smaller than the variation under the monkey strategy. We can do further statistical tests, but for the moment I simply conclude that, under the monkey-typing strategy, the children don't have uniformly distributed winning chances.

Still I find it interesting to analyze how many throws this monkey strategy needs on average tof inish, in particular how it compares to the acceptance-rejection policy designed in 1. If it slower, then the policy to use (multiple) rounds of three throws times is better in all respects, but if the monkey policy is faster, we might make a trade off between fairness and speed. Note that this is a common theme in optimization: nearly always there are multiple objectives that we like to minimize or maximize, but we cannot perform optimally on all dimenstions at the same time. If so, we need to make a choice which objective we prefer over another.5

Returning to the analysis of the average number of throws it takes the monkey to select a winner, consider the code below. The next exercise asks you to explain some parts of it.

T = 0

for _ in range(N):

last_two = (rng.integers(6), rng.integers(6))

T += 2

while last_two in reject:

last_two = (last_two[1], rng.integers(6))

T += 1

print(f"{T/N=}")

T/N=2.94335

Explain why T counts the total number of throws for N simulations.

Solution

We add \(2\) to capture the first two throws for each new game. Then we add a throw for each time last_two lies in the rejection set, hence did not lead to a winner.

As T counts the total number of throws for \(N\) games, T/N is the average number of throws per game.

Apparently, this lies just a bit below \(3\).

In summary, although unfair, the monkey policy needs less throws on average than the best acceptance-rejection policy so far, as this already needs three throws for the first round.

4. An optimal strategy

In the above we learned that when the class contains \(N=19\) children, the monkey strategy, which prescribes to continue throwing the die until a winning pattern emerges, does not give a fair outcome. Hence, for the moment, by 1 the best fair algorithm for \(N=19\) seems to throw three times: if that gives a winner, stop; otherwise throw three times again, and continue with rounds until there is a winner.

However, there is a strategy that is fair and minimizes the expected number of throws at the same time. This strategy we discuss now. Although a bit harder to understand, it's an elegant interplay between probability and number theory.

In the sequel we write \(Z\sim\Unif{\{0, 1, \ldots, n\}}\) to say that a rv \(Z\) is uniformly distributed on the set \(\{0, 1, \ldots, n\}\). We also write \(Z|A\) to denote a rv \(Z\) given that the event \(A\) materialized.

Let us first estimate the number of throws needed for a class with \(N=20\) children; this is somewhat simpler than the case with \(N=19\).

Consider the following code, where \(X\) is an iid uniform rv on \(\{0,\ldots,5\}\) corresponding to the throw of the die.

The line t % 20 computes the remainder of t after dividing by \(20\).

import numpy as np

rng = np.random.default_rng(1)

t = 0

while t < 16:

X = rng.integers(6)

t = 6 * t + X

t = t % 20

Explain that \(t_{1}\sim \Unif{\{0, \ldots, 5\}}\), \(t_{2}\sim \Unif{\{0, \ldots, 35\}}\), and \(t_{i}\sim \Unif{\{16,\ldots 95\}}\) for any throw \(i\geq 3\). Then explain why \(t\sim \Unif{\{0, 1, \ldots, 19\}}\) when the algorithm terminates.

Solution

We start with \(t_{0}=0\). After the first throw

\begin{equation*} t_{1} = 6 t_{0} + X_{1} = X_{1} \sim \Unif{\{0, \ldots, 5\}}. \end{equation*}After the second, the rv \(6t_{1} \sim \Unif{\{0, 6, 12, 18, 24, 30\}}\). And thus, \(t_{2} = 6t_{1} + X_{2} \sim \Unif{\{0, 1, 2, \ldots, 35\}}\). If \(t_{2} \geq 16\), then \(t_{2}\in \{16, 35\}\). But in this case \(t_{2} | t_{2} \geq 16\) is uniform on a set with 20 elements. Thus, by taking the remainder \(t_{2} \pmod{20}\) we get a uniform rv on \(\{0, 1, 2, \ldots, 19\}\). If, on the other hand, \(t_{2} < 16\), then \(t_{2} | t_{2} < 16 \sim \Unif{\{0, 1, \ldots, 15\}}\). A third throw then gives \(t_{3} = 6t_{2}+ X_{2} \sim\Unif{\{0, 1, 2, \ldots, 95\}}\). When \(t_{3}\in \{16, 95\}\) we can divide by 20 and take the remainder (observe that the set \(\{16, 17,\ldots, 95\}\) has 80 elements). And, when \(t_{3}\leq 15\), throw again, and so on.

Why does this algorithm minimize the expected number of throws to obtain a rv uniform on \(\{0, 1, \ldots, 19\}\)?

Solution

After every throw, the set that requires another throw is minimal. Specifically, of the set \(\{0, 1, \ldots, 95\}\) we can at most remove 80 elements to guarantee a uniform drawing after dividing by 20 and taking the remainder.

The above two exercises explain the threshold \(16\) of the algorithm for \(N=20\). Now that we understand this, we can try to generalize the algorithm to other values for \(N\), such \(N=19\). Consider the following update rule for \(i=1, 2, \ldots\), \(t_0=0\), and \(X_1\sim\Unif{\{0, \ldots, 5\}}\),

\begin{equation*} t_i = 6 t_{i-1} + X_{i}. \end{equation*}Take \(N=19\). After the second throw, \(t_{2}\sim \Unif{\{0, 1, \ldots, 35\}}\). In view of the above, what subset of \(\{0, 1, \ldots, 35\}\) can we use as an acceptance set to generate a rv \(\sim \Unif{\{0, \ldots, 18\}}\) from \(t_{2}\)? What should be the rejection set for \(t_{2}\)? Finally, why should we replace the threshold \(16\) for the case \(N=20\) by \(17\) for \(N=19\)?

Solution

We don't have to rethrow when \(t_{2}\in \{17, 18, \ldots, 35\}\), as this set contains \(35 - 16 = 19\) elements. However, when \(t_{2}\) lies in \(\{0, 1, \ldots, 16\}\), we need to throw again. Thus, the threshold for \(t_{2}\) should be \(17\) rather than \(16\).

For \(N=19\), we should change the acceptance threshold to 7 after the third throw. Why?

Solution

As \(t_2\) is maximally \(16\), the largest value of \(t_{3}\) is \(6 \times 16 + 5 = 101\). To make the probability of a rethrow as small as possible, we take \(7\) as a threshold since \(101 - 5\times 19 = 6\). The event \(\{7, 8, \ldots, 101\}\) contains \(95 = 5\times 19\) elements, so on this subset we can divide by \(19\) and take the remainder to get a uniform rv on \(\{0, 1, \ldots, 18\}\).

Notice that for \(N=19\) the threshold is not the same for each throw, i.e., 17 for the second and 7 for the third throw.

Explain again why for \(N=20\) the threshold remains \(15\).

Solution

\(N=20\) is a special case. We have seen earlier that \(t_2\) is maximally \(15\) in this case. But then, \(t_{3} = 6 \times 15 = 95\). Removing the largest possible acceptance set containing \(4\times 20 = 80\), we see that \(6\times 15 + 5 - 4 \times 20 = 15\). Thus, the thresold \(15\) is a fixed point.

Clearly, to deal with cases such as \(N=19\) we need to modify the above algorithm. Here is a generalized version.6

def select_uniformly_with_a_die(N):

n = r = 1

t = 0

while t < r:

X = rng.integers(6)

t = 6 * t + X

n = 6 * r

r = n % N

return t % N

What sequence of (n,r) does select_uniformly_with_a_die produce for the cases \(N=2\), \(N=3\), and \(N=6^{3}=216\) (or any other power of 6)?

When does it terminate?

Solution

For \(N=2\), starting with n = r = 1, t = 0, after one throw (n,r) = (6,0), hence \(t_{1} = 6t_{0}+X = 6\cdot 0 + X \geq 0 = r\).

Thus, the algo terminates, and the winning child is \(t_{1}= X \pmod{2} \in \{0, 1\}\).

For \(N=3\) it works the same: we have that \(t=X \pmod{3} \in \{0, 1, 2\}\).

For \(N=6^3=216\), we see that (n, r) progresses as \((1,1) \to (6,6) \to (36, 36) \to (216, 0)\).

Since now \(r=0\), the algorithm necessarily terminates after three steps.

The same questions, but now for \(N=4\). With this, show that \(\E Y = 4/3\).

Solution

For \(N=4\), (n, r) develops as \((1,1) \to (6, 2) \to (12, 0)\).

As after the first throw \((n,r)=(6,2)\), the probability to accept the outcome is \((n-r)/n = 4/6\), and to reject is \(r/n = 2/6\).

After the second throw \((n,r)=(12,0)\), so any outcome will be accepted, and the algorithm terminates

Hence, the expected number of throws is \(E Y =\frac{4}{6} + 2\cdot \frac{2}{6} = \frac{4}{3}\).

Explain that \(\E Y = 72/35\) for \(N=7\). Compare with 1.

Solution

For \(N=7\), the tuple (n, r) progresses as \((1,1) \to (6, 6) \to (36, 1)\to (6, 6) \to \) and so on. Hence,

In other words,

\begin{equation*} \E Y = 2 + \frac{1}{36}\E Y \implies \frac{35}{36}\E Y = 2 \implies \E Y = 72/35. \end{equation*}Why does this algorithm work for general \(N\)?

Solution

The variable \(r\) contains the remainder of \(n\) after dividing by \(N\). The largest that \(t\) can become after a new iteration is \(6r + 5\), while the smallest value of \(t\) is \(0\), which we get after successive outcomes of \(X=0\). Thus, \(t\in \{0, 1, \ldots 6r + 5\}\). This set contains \(n=6r\) elements. By taking \(r\) to \(n \pmod{N}\) we minimize the size of the rejection set for the next throw.

Let us test this generalized algorithm numerically. Here is the code.

count = collections.Counter()

for _ in range(num_runs):

s = select_uniformly_with_a_die(19)

count[s] += 1

most = count.most_common()[0][1]

least = count.most_common()[-1][1]

mean = num_runs // 19

print(f"{len(count)=}, {count.total()=}")

print(f"{least=}, {mean=}, {most=}")

len(count)=19, count.total()=100000 least=5144, mean=5263, most=5434

Think about the simplest possible test of the correctness of this algorithm. Does the output satisfy this test?

Solution

We see that at least all children can become a winner because count has 19 elements..

If we compare this output to our earlier results, the difference between the least and most winning child is reasonable. In fact, comparing the occurrences, we see that our algorithm performs very well!

The last step is to estimate the expected number of throws \(\E Y\) required for the case \(N=19\). First, however, we consider \(N=20\) because in this case we can obtain a simple closed-form expression for \(\E Y\).

For \(N=20\) explain that

\begin{align*} \E Y &= 2 + \frac{16}{36} \E Z, & \E Z &= 1 + \frac{16}{96} \E Z. \end{align*}Hence \(\E Z = 6/5\) and \(\E Y = 2.5333\).

Solution

We need at least 2 throws. When \(t < 16\), which happens with probability \(16/36\), we need to rethrow. The rv \(Z\) is the number of throws, if we need to rethrow. Since \((r, n)=(16, 96)\),

\begin{equation*} \E Z = 1 + \frac{16}{96} \E Z \implies \E Z = 1 + \frac{1}{6}\E Z \implies \E Z = \frac{6}{5}. \end{equation*}To compute \(\E Y\) for general \(N\) we can slightly modify the above algorithm.

Explain the algorithm below.

Solution

The overall algorithm specifies to continue after iteration \(i\) when \(t < r_{i}\), and otherwise to stop. The probability per iteration to continue is \(r_{i}/n_{i}\). Thus, the probality to survive for \(i\) rounds is \(\alpha_i = \alpha_{i-1} r_i/n_i\). Adding up these probabilities gives us \(\E Y\).

Numerically we stop when \(\alpha_i \leq \epsilon\), with \(\epsilon\) some small number.

N = 20

n = r = 1

alpha, eps = 1, 1e-3

EY = 0

while alpha >= eps:

EY += alpha

n = 6 * r

r = n % N

alpha *= r / n

print(f"{n=:3d}, {r=:3d}, {r/n=:2.4f}, {alpha=:2.4f}, {EY=:2.4f}")

n= 6, r= 6, r/n=1.0000, alpha=1.0000, EY=1.0000 n= 36, r= 16, r/n=0.4444, alpha=0.4444, EY=2.0000 n= 96, r= 16, r/n=0.1667, alpha=0.0741, EY=2.4444 n= 96, r= 16, r/n=0.1667, alpha=0.0123, EY=2.5185 n= 96, r= 16, r/n=0.1667, alpha=0.0021, EY=2.5309 n= 96, r= 16, r/n=0.1667, alpha=0.0003, EY=2.5329

This seems to converges to \(2.533\). The last task is to run the code for \(N=19\). This gives \(\E Y=2.509\). Comparing this to the monkey strategy of 1 we see that this algorithm does better on all respects: it is fair and requires less throws on average.

And now two final questions.

What is the relation between this good algorithm and the monkey-typing strategy, and what is the major difference?

Solution

Both policies start with throwing twice, and then continue with single throws to decide to stop or not. So, both policies don't work with rounds of throws. The difference is in the acceptance set. In particular, for the good policy, this set is dynamic, i.e., depends on the value of \((n,r)\).

Can it ever happen for \(N=19\) and a \(6\) sided die that the rejection set becomes empty? Recall, when \(N=4\), the rejection set is empty after two steps, since \(r_2=0\).

Solution

No, this is impossible. Take any \(r\in \{0,1,\ldots, 17\}\), and set \(n=6r\). Then \(n\) and \(19\) are co-prime, in other words, the greatest common divisor of \(n\) and \(19\) is \(1\). This implies that \(r = n \pmod 19 \in \{0, 1,\ldots, 17\}\).

5. Summary

By studying the above toy problem we covered many nice and useful ideas. We developed acceptance-rejection strategies to select a winner. Then we tried to speed up this algorithm to the monkey algorithm, but this algorithm turned out to be unfair. However, by improving the idea, we were able to find a fair and optimal algorithm. This final algorithm is not quite trivial, but effective and suprisingly short.

In the next section we'll look at the monkey-typing strategy. This will provide us with a number of useful tools, in particular first-step analysis.

Footnotes:

It seems like an experiment in which the aim is to prove that a new medicine works. If the data shows that the medicine does not work, just repeat.

I frequently mess up these rvs.

There is subtle detail for this idea to work, \(\E Y\) should be finite. We will ignore such mathematical points in the sequel.

Ask ChatGPT to explain code you don't understand.

Finding good objectives is most often not a mathematical problem, in fact, some (or much) wisdom is needed to understand when to use mathematics, and when to stop using mathematics.

We assign the outcome of the throw of the die to the variable \(X\) just for clarity.